Terraform Tutorial For Beginners Your First Iac Project

#terraform-tutorial-for-beginners#Terraform-IaC#DevOps-Basics#Cloud-Infrastructure#AWS-Terraform

November 11, 2025

This Terraform tutorial for beginners is all about showing you how to manage cloud infrastructure using code. We're going to take what used to be a complex, manual setup process and turn it into simple, repeatable text files. You'll learn the core workflow - write, plan, and apply - and by the end, you'll be building real resources in the cloud, even if you're starting from scratch.

Why Terraform Is a Game Changer for Cloud Infrastructure

Before we jump into writing code, it's worth taking a moment to understand why a tool like Terraform even exists. Not long ago, setting up servers, databases, or networks meant hours of clicking through a cloud provider's web console. This old way of doing things is not only slow but also a recipe for human error, and good luck trying to replicate that setup perfectly every single time.

Before we jump into writing code, it's worth taking a moment to understand why a tool like Terraform even exists. Not long ago, setting up servers, databases, or networks meant hours of clicking through a cloud provider's web console. This old way of doing things is not only slow but also a recipe for human error, and good luck trying to replicate that setup perfectly every single time.

This is where Infrastructure as Code (IaC) comes in and completely changes the game. IaC lets you treat your infrastructure - your servers, networks, and storage - exactly like you treat your application code. You define everything in simple, human-readable configuration files that can be versioned, shared, and reused.

Terraform has become one of the go-to tools for IaC because it's incredibly good at this. It uses a declarative approach. Instead of writing out every single step for how to build something, you simply define the final state you want - the 'what'. Terraform is smart enough to figure out the most efficient way to get you there.

The Problem with Clicking Around in the Console

Let's imagine a common scenario. You've spent a day manually configuring a web server, a database, and a load balancer in the AWS console. A few weeks later, a teammate needs to fix an urgent issue and makes a quick change directly in the console.

That undocumented change just created what we call configuration drift. Your actual, running infrastructure no longer matches your documentation or what you think you have. This is a huge source of unexpected outages and security vulnerabilities.

How Terraform Solves This

Terraform brings sanity to this chaos by making your code the single source of truth. It's a fundamentally better approach, and it doesn't matter which cloud you're on after learning how to choose a cloud provider.

- Repeatability: Need an identical environment for development, staging, or production? Just run one command. Done.

- Accountability: Every change is tracked in version control (like Git). You always have a clear audit trail of who changed what, when, and why.

- Safety: The

terraform plancommand is your safety net. It shows you exactly what changes will be made before you apply them, preventing costly mistakes.

Here's a quick look at how the two approaches stack up.

Manual Infrastructure vs Terraform (IaC)

| Aspect | Manual Management (Clicking in Console) | Terraform (Infrastructure as Code) |

|---|---|---|

| Speed | Slow, tedious, and requires manual repetition for each environment. | Fast and automated. A single command can deploy complex setups. |

| Consistency | Highly prone to human error and configuration drift. | Guarantees consistent environments every time the code is applied. |

| Versioning | No built-in versioning. Changes are hard to track and revert. | Uses version control (Git), providing a full history of changes. |

| Collaboration | Difficult for teams to work on infrastructure simultaneously. | Easy for teams to collaborate, review, and approve changes. |

| Documentation | Documentation is separate and often becomes outdated. | The code itself is the documentation - always up-to-date. |

| Scalability | Scaling requires significant manual effort and time. | Easily scale infrastructure up or down by changing a few lines of code. |

It's clear why IaC has become a cornerstone of modern DevOps.

By 2025, over 78% of organizations will have integrated DevOps practices into their workflows. This isn't just a trend; it results in real gains like 46 times more frequent code deployments and a 96 times faster recovery from failures.

Managing infrastructure with code allows teams to build far more reliable, secure, and scalable systems. If you're curious about how Terraform fits into the broader landscape, this comparative analysis of IaC tools including Terraform offers some great perspective. This foundation is exactly why getting comfortable with Terraform is such a valuable skill to have.

Preparing Your Local Development Environment

Alright, this is where the fun really starts. Before you can write a single line of code to build your infrastructure, you need to get your local machine set up with the right tools. The most important piece of the puzzle is the Terraform Command Line Interface (CLI).

First things first, head over to the official HashiCorp website and download the Terraform binary. They have packages for Windows, macOS, and Linux, so no matter what you're running, you're covered. Once it's downloaded, you just need to pop the executable into a directory that's included in your system's PATH. This little step is what lets you run terraform commands from any terminal, anywhere on your machine.

Supercharge Your Editor with an Extension

You could write Terraform code in a simple text editor like Notepad, but trust me, you'll be making your life much harder than it needs to be. A proper code editor with dedicated Terraform support is a game-changer. My personal recommendation, and a favorite in the DevOps world, is Visual Studio Code (VS Code).

The official HashiCorp Terraform extension for VS Code is a must-have. It adds a ton of features that catch common mistakes before they become headaches.

- Syntax Highlighting: This is huge for readability. It colors your providers, resources, and variables, making the code much easier to scan and understand.

- Intelligent Autocompletion: As you start typing, it will suggest resource types and their required arguments. This saves you countless trips back and forth to the documentation.

- Built-in Code Formatting: Keeps your

.tffiles neat and tidy, ensuring consistent styling across your project with a simple keystroke.

Here's a quick look at the official extension on the VS Code Marketplace.

Grabbing this free extension will seriously boost your productivity and help you sidestep simple syntax errors, which is a massive win when you're just getting your feet wet.

Connect Securely to Your Cloud Provider

With the CLI and your editor ready to go, the final piece is connecting Terraform to your cloud account. Terraform needs credentials to talk to the cloud provider's API on your behalf - it's how it creates, updates, and deletes all those resources. For this guide, we'll use AWS as our example.

Pro Tip: The best and most secure way to do this is by creating a dedicated IAM (Identity and Access Management) user in AWS just for Terraform. Never, ever use your root account credentials. Grant this new user only the specific permissions it needs to manage your infrastructure, sticking to the principle of least privilege.

After creating the IAM user, AWS will give you an Access Key ID and a Secret Access Key. The easiest way to configure these is with the AWS CLI. Just run the aws configure command in your terminal and plug in your keys. This command stores them securely in a local file, and Terraform is smart enough to find and use them automatically. This is a crucial step for keeping your sensitive credentials out of your actual configuration files.

Treating this setup process methodically is key, just as any good getting started guide would advise. Now that your environment is fully prepared, you're officially ready to write your first piece of infrastructure as code.

Writing Your First Real Terraform Configuration

Alright, with Terraform installed, it's time for the fun part: writing some code to build actual infrastructure. This is where the theory ends and the hands-on work begins. We're going to walk through the structure of a Terraform file, block by block, so you know exactly what each part does and why it's there.

First things first, all Terraform files end with the .tf extension. They're written in a language called HashiCorp Configuration Language (HCL), which was specifically designed to be easy for humans to read and for machines to parse. You'll find the syntax is pretty clean - it uses blocks, arguments, and expressions to define everything.

The Essential Building Blocks

When you open up a Terraform file, you'll almost always see three main types of blocks, especially when you're just starting out. Let's get familiar with them before we write our own.

terraformblock: This is where you configure Terraform itself. Think of it as the settings menu. You'll use it to tell Terraform which providers (like AWS or Azure) you need and which versions of those providers are compatible with your code. This is super important for making sure your configurations work consistently everywhere.providerblock: This block is your connection to a specific cloud or service. It tells Terraform, "Hey, I want to talk to AWS," and lets you set crucial details like which region to build your resources in (e.g.,us-east-1).resourceblock: This is where the magic happens. Aresourceblock represents a tangible piece of infrastructure - a virtual server, a database, or, in our case, a simple file storage bucket.

That provider block is a bigger deal than it looks. To give you some perspective, by mid-2025, the Terraform AWS provider had already been downloaded over 4 billion times. That's not a typo. It's the critical piece that translates your code into real AWS resources, and learning to use it well is an incredibly valuable skill. You can actually read more about the AWS provider's journey over on HashiCorp's blog.

Creating Your First AWS S3 Bucket

Let's build something practical that you'll use all the time: an AWS S3 bucket. It's a foundational cloud resource and the perfect "Hello, World!" for infrastructure.

Go ahead and create a new file named main.tf and paste this code inside.

terraform { required_providers { aws = { source = "hashicorp/aws" version = "~> 5.0" } } }

provider "aws" { region = "us-east-1" }

resource "aws_s3_bucket" "my_first_app_bucket" { bucket = "my-unique-app-bucket-name-12345"

tags = { Name = "My first app bucket" Environment = "Dev" ManagedBy = "Terraform" } }

So, what's this code actually doing? The resource block declares we want a resource of type aws_s3_bucket and we're giving it a local name, my_first_app_bucket. This local name is just for us - it's how we'll refer to this specific bucket inside our Terraform project if we need to.

Key Takeaway: The resource type (

aws_s3_bucket) is defined by the provider and has to be exact. The local name (my_first_app_bucket) is completely your choice and is only used for reference within your code.

Inside the block, the bucket argument sets the globally unique name for our S3 bucket. Remember, S3 bucket names have to be unique across all of AWS, so you'll need to change "my-unique-app-bucket-name-12345" to something personal to you.

Finally, the tags block is a map of key-value pairs we're attaching to our bucket. This is a non-negotiable best practice in any real-world cloud environment for keeping your resources organized. This simple structure is the foundation of all your future work with Infrastructure as Code, a concept we dive into much deeper in other articles.

Mastering the Core Terraform Workflow

Alright, you've written your first bit of Terraform code. Now for the exciting part - turning that code into actual, tangible infrastructure. This is where the magic really happens.

The entire process boils down to a core, three-step workflow that you'll run over and over again in your career: init, plan, and apply. Think of it as a reliable rhythm for managing your cloud resources.

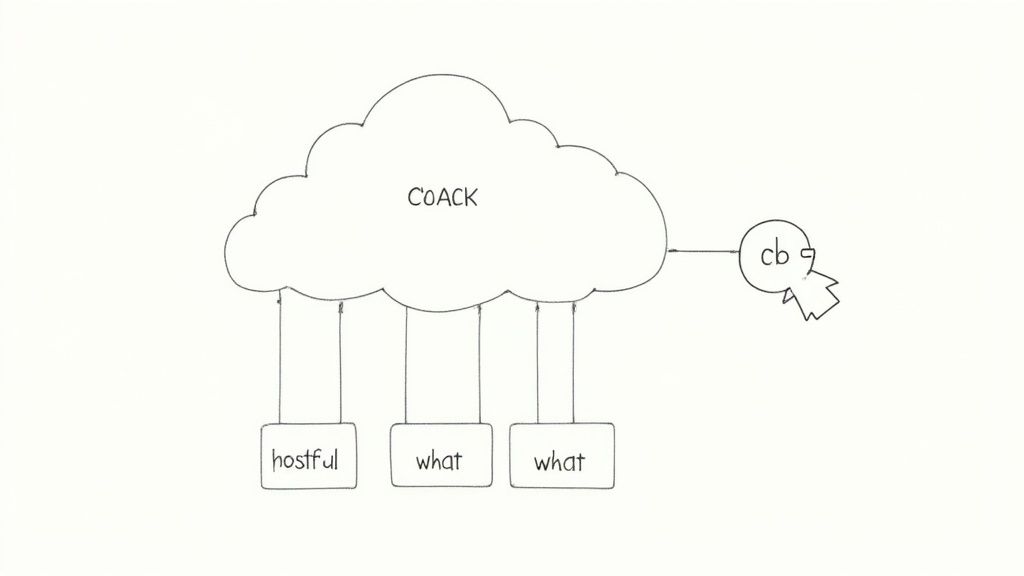

The infographic below gives you a bird's-eye view of how your configuration file tells providers what to build.

Essentially, your code is the blueprint. The provider is the construction crew. Let's walk through each command and bring our S3 bucket to life.

The First Command: terraform init

Before you can do anything else, you have to initialize your project directory. This is a one-time setup command you run for every new Terraform project you start.

When you run terraform init, a few important things happen in the background:

- It downloads the right tools: Terraform scans your

.tffile, sees thehashicorp/awsprovider block, and downloads the specific plugin needed to communicate with AWS. - It prepares your state: For now, it sets up your local machine to store the "state file," which is how Terraform keeps track of the resources it manages.

- It grabs any modules: If your project used reusable modules,

initwould download them during this step.

Just pop open your terminal, make sure you're in the same directory as your main.tf file, and run the command. You should see a success message letting you know the project is ready to go.

Your Safety Net: terraform plan

This next command is, without a doubt, the most critical one in the entire workflow. I can't stress this enough. terraform plan is your dry run. It gives you a preview of exactly what changes Terraform is about to make, without actually doing anything.

Think of it as your last line of defense against mistakes.

When you run it, Terraform checks the current state of your AWS account and compares it against the desired state you defined in your code. It then generates a clear execution plan, using symbols to show what will happen:

+(green): A new resource is going to be created.-(red): An existing resource is going to be destroyed.~(yellow): A resource will be modified in-place.

Get into the habit of carefully reading every single line of the plan output. This is your chance to catch a typo that might accidentally delete a production database or create an oversized, expensive virtual machine.

Go ahead and run terraform plan. The output will tell you it plans to add one new resource: our aws_s3_bucket.

Bringing It to Life: terraform apply

Once you've double-checked the plan and everything looks correct, it's time to make it happen. The terraform apply command is what actually executes the changes.

As a final confirmation, it will show you the exact same plan again and ask for your approval.

Type yes and hit Enter.

You'll see Terraform communicating with the AWS API in real-time as it creates your S3 bucket. A few moments later, it will confirm the apply is complete. This kind of structured, automated deployment is a cornerstone of modern DevOps, a concept you'll also find at the heart of our comprehensive Azure DevOps tutorial.

Now for the payoff. Head over to your AWS Management Console, go to the S3 service, and you should see your brand-new bucket waiting for you, complete with the tags you defined. That's the "aha!" moment - your code is now a real thing in the cloud.

Managing Infrastructure State and Day Two Operations

Getting that first resource up and running is a great feeling, but the real magic of Terraform kicks in with what happens next. Infrastructure isn't a "set it and forget it" deal. It's a living thing that needs constant updates, tweaks, and eventually, a proper retirement. This is where we step out of "day one" setup and into the world of "day two" operations.

The core of this whole process is a file called terraform.tfstate. The moment you successfully run terraform apply, this JSON file pops into your project directory. Think of it as Terraform's brain - a detailed map that connects the configuration you wrote to the actual, live resources in your cloud account.

The state file is Terraform's single source of truth. It tracks resource IDs and attributes so Terraform knows precisely what it's supposed to be managing. Without this file, Terraform would have no idea what it built.

A quick word of caution: this file can hold sensitive data. For now, it's just sitting on your local machine, which is fine for learning. But once you start working with a team, you'll need to store it in a secure, shared location called a remote backend.

Modifying and Updating Infrastructure

Let's put this into practice. What happens when you need to change something? Imagine your finance team just told you that all resources need a new tag for cost-tracking.

Your first instinct might be to log into the AWS console and add it manually. Don't! The whole point of Infrastructure as Code is to manage everything from your code.

Simply open up main.tf and make the change directly.

resource "aws_s3_bucket" "my_first_app_bucket" { bucket = "my-unique-app-bucket-name-12345"

tags = { Name = "My first app bucket" Environment = "Dev" ManagedBy = "Terraform" CostCenter = "Project-Alpha" # Our new tag } }

Save the file and run terraform plan again. Terraform is smart. It reads your updated code, compares it against the terraform.tfstate file to see what currently exists, and pinpoints the exact difference. The plan will show one resource to be changed (~) and will highlight that only the tags are being updated.

Now, run terraform apply and type yes when prompted. Terraform makes that one specific change, leaving everything else untouched. This precise, predictable update process is exactly why a code-first approach is so powerful.

Cleaning Up with Terraform Destroy

When you're finished with your infrastructure, you need an easy way to tear it all down so you don't get a surprise bill at the end of the month.

This is where the terraform destroy command saves the day.

Running it gives you a "reverse" plan, showing every resource that will be deleted, marked with a red minus sign (-). This is your last chance to double-check everything before it's gone for good. Once you confirm, Terraform goes to work, methodically removing every piece of infrastructure it created. Your cloud account goes back to the way it was before you started, completing the full infrastructure lifecycle.

Common Questions from New Terraform Users

When you're just starting your journey with Terraform, a few questions pop up again and again. Let's tackle them head-on, because understanding these concepts early will save you a ton of headaches down the road.

What's the Real Difference Between Terraform and Ansible?

This is easily the most frequent point of confusion, but the distinction is pretty clear once you see it. Think of it like building a brand new restaurant.

Terraform is your general contractor. It handles the heavy lifting of provisioning the core infrastructure - it pours the foundation, raises the building, and installs the plumbing and electrical systems. You give it the blueprints (your code), and it constructs the physical space.

Ansible, in contrast, is your kitchen and front-of-house outfitter. It comes in after the building is up. It installs the ovens, sets up the point-of-sale systems, and arranges the tables and chairs. It configures the software and services running inside your servers.

They aren't competitors; they're collaborators. A common and powerful pattern is to use Terraform to spin up the servers and then have Ansible take over to configure them perfectly.

Can I Use Terraform with Clouds Other Than AWS?

Absolutely. This is one of the main reasons so many people love Terraform. While our guide focuses on AWS, Terraform itself doesn't play favorites. It's designed to be cloud-agnostic.

The magic behind this flexibility lies in a system of plugins called providers.

There's a massive ecosystem of providers for just about anything you can imagine, including all the major cloud platforms:

- Microsoft Azure

- Google Cloud Platform (GCP)

- Oracle Cloud Infrastructure (OCI)

And it doesn't stop there. You'll find providers for Kubernetes, Cloudflare, Datadog, and hundreds more. The best part? Your core workflow (init, plan, apply) stays exactly the same. You just point your configuration to a different provider.

Is the Terraform State File Sensitive?

Yes, 100%. You must treat the terraform.tfstate file as if it contains secret keys and passwords, because sometimes it does. This file is the single source of truth that maps your code to the actual resources running in the cloud.

As your infrastructure gets more complex, this file will store resource IDs, IP addresses, and potentially even data you've passed in.

Your state file is a detailed map of your live infrastructure, including sensitive data. It should never be committed to a public Git repository.

When you're just learning on your own machine, a local state file is fine. But the moment you start working with a team, the standard is to move to a secure remote backend. This means storing the state file somewhere safe and shared, like an AWS S3 bucket, which also provides state locking to stop teammates from accidentally overwriting each other's changes.

At Pratt Solutions, we live and breathe this stuff, building solid cloud infrastructure and automation for our clients using tools just like Terraform. If your team needs a hand leveling up its cloud game, check out our custom solutions and consulting services. Visit us at john-pratt.com to see how we can help you build better, faster.