A Modern DevOps Tools Comparison

#devops-tools-comparison #ci/cd-tools #infrastructure-as-code #devops-platforms #monitoring-tools

November 22, 2025

Picking the right DevOps tools isn't just a technical task; it's a strategic move that directly shapes your team's speed, reliability, and overall efficiency. The core choice you'll face in any DevOps tools comparison boils down to a classic dilemma: do you go for specialized, best-in-class tools, or do you opt for an integrated, all-in-one platform? The answer depends on what you value more: deep, granular control or a simple, unified workflow.

Navigating the Modern DevOps Landscape

Let's be clear: the "best" DevOps toolchain is entirely dependent on your context. What works brilliantly for a five-person startup will almost certainly grind a 500-person enterprise to a halt. This guide cuts through the marketing noise and gets into a more nuanced analysis of the tools that matter across the most critical categories.

My goal here is to give you practical criteria for building a toolchain that actually works together. You want tools that genuinely speed up software delivery, not ones that introduce new bottlenecks.

Core DevOps Tool Categories

First, you have to understand the map. The landscape is best understood by breaking it down into distinct functional areas. Each category solves a specific problem in the software delivery lifecycle, but you'll notice many tools have features that bleed into other areas. A smart DevOps tools comparison looks at how these categories and tools fit together.

- CI/CD & Automation: These are the engines of your delivery pipeline, responsible for automatically building, testing, and deploying your code.

- Infrastructure as Code (IaC): This is all about defining and managing your servers, networks, and databases through code instead of manual configuration.

- Containerization & Orchestration: These tools help you package applications into lightweight containers and then manage them at scale.

- Monitoring & Observability: You can't fix what you can't see. These platforms are for tracking system health, performance metrics, and application logs.

Key Factors Driving Your Decision

Before you even start looking at specific tools, you need to look inward at your organization's unique DNA. The perfect choice for another company could be a complete disaster for yours. Think hard about your team size, your current tech stack, future growth plans, and, of course, your budget.

The most effective DevOps strategy isn't about adopting the most popular tools, but about assembling a toolchain that creates a seamless, low-friction path from code commit to production value.

For instance, a team that lives and breathes the Atlassian ecosystem might find Bitbucket Pipelines to be a no-brainer. On the other hand, a startup that already hosts its code on GitHub and needs to move fast might prefer the simplicity and tight integration of GitHub Actions.

| Factor | Description | Implication for Tool Choice |
|---|---|---|
| Team Size | The number of developers and operators who will use the tools. | Larger teams often need enterprise-grade features like RBAC and detailed audit logs. |
| Tech Stack | Your current programming languages, frameworks, and cloud provider. | Tools with native integrations into your existing stack will save you a ton of setup pain. |
| Scalability | The need for tools to grow as your application and team expand. | Choose tools that can handle increased load without forcing a painful re-architecture down the line. |

How to Choose Your DevOps Tools: A Practical Framework

Diving into a DevOps tools comparison without a clear plan is a recipe for disaster. It's easy to get sidetracked by flashy features and marketing hype, only to end up with a tool that doesn't actually solve your team's core problems. Before you even look at a single product, you need to build your own evaluation framework. Think of it as your North Star, guiding you to the right choice.

What works for a two-person startup building their first CI pipeline is completely different from what a heavily regulated enterprise needs to orchestrate hundreds of microservices. Your specific context - the skills on your team, the scale of your projects, and your security posture - has to be the starting point for every decision.
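One way to make your evaluation framework concrete is a simple weighted scoring matrix. The sketch below assumes you score each candidate from 1 to 5 on criteria drawn from this guide. The weights, tool names, and scores are hypothetical placeholders for your own team's priorities, not product ratings.

```python
from typing import Dict

# Hypothetical weights reflecting one team's priorities; they should sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "integrations": 0.30,
    "scalability": 0.25,
    "support": 0.20,
    "tco": 0.15,
    "security": 0.10,
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 scores per criterion into a single weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for criterion, value in scores.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{criterion} must be scored 1-5, got {value}")
    return round(sum(weights[c] * scores[c] for c in weights), 2)


# Placeholder scores for two hypothetical candidates, not real product ratings.
candidates = {
    "tool_a": {"integrations": 5, "scalability": 4, "support": 3, "tco": 2, "security": 4},
    "tool_b": {"integrations": 3, "scalability": 4, "support": 5, "tco": 4, "security": 4},
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
print(ranking)  # ['tool_b', 'tool_a']
```

The final number matters less than the exercise itself. Agreeing on the weights before any vendor demo keeps flashy features from quietly rewriting your priorities.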

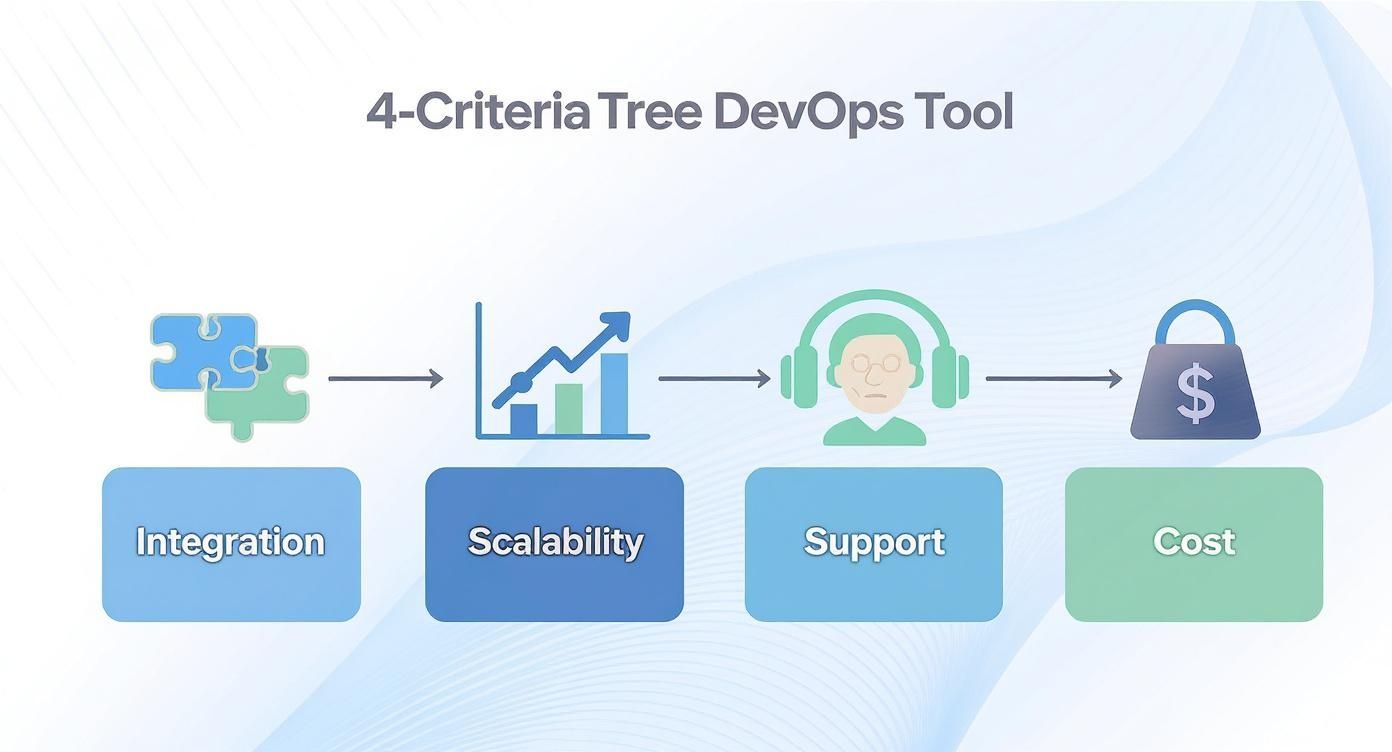

Map Out Your Integration and Ecosystem Needs

No DevOps tool is an island. Its real value comes from how smoothly it plugs into the rest of your stack. A tool with a thriving ecosystem of integrations can save you hundreds of hours that would otherwise be spent on custom scripts and maintenance headaches. Ask yourself: how will this new tool talk to our source code repository, our artifact manager, and our cloud provider?

Look at the quality and variety of available plugins and connectors. A busy marketplace or a well-documented API is a good sign that a tool is built to adapt. That helps future-proof your decision: as your stack changes, your toolchain can change with it instead of locking you into a dead-end system.

A tool's worth isn't just in its own features, but in how it enhances the tools around it. You're building a cohesive ecosystem, not just collecting a bunch of powerful but separate tools.

Check for Scalability and Real-World Performance

Scalability is more than just handling a bigger codebase or more users. It's about how a tool holds up as your organization itself gets more complex. Will it work for one project today, but also scale to support fifty teams next year without grinding to a halt?

Look for features that are designed for growth:

- Role-Based Access Control (RBAC): This is non-negotiable for managing permissions as your team expands.

- High Availability and Clustering: You can't let your core tools become a single point of failure.

- Performance Under Load: How does it behave when running dozens of concurrent pipelines, builds, or infrastructure deployments?
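To put a rough number on performance under load, Little's Law (average jobs in flight = arrival rate × average duration) gives a quick estimate of how many build agents or runners you need. This is a back-of-the-envelope sketch. The build volume and the headroom multiplier are hypothetical inputs.

```python
import math


def agents_needed(builds_per_hour: float, avg_build_minutes: float, headroom: float = 1.5) -> int:
    """Estimate how many concurrent build agents a team needs, via Little's Law.

    Little's Law: average jobs in flight = arrival rate x average time in the system.
    `headroom` is a hypothetical burst multiplier (e.g. everyone pushing before a release).
    """
    if builds_per_hour < 0 or avg_build_minutes < 0 or headroom < 1:
        raise ValueError("rates and durations must be non-negative; headroom must be >= 1")
    avg_in_flight = builds_per_hour * avg_build_minutes / 60.0
    return math.ceil(avg_in_flight * headroom)


# Hypothetical team: 120 builds per hour averaging 10 minutes each.
print(agents_needed(120, 10))                # 30 (20 builds in flight on average, plus 50% headroom)
print(agents_needed(120, 10, headroom=1.0))  # 20
```

Averages hide queueing. If agents are busy close to 100% of the time, builds start waiting in line, so the sketch pads the estimate instead of provisioning for exactly the average.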

A tool that's a breeze to set up for a small team can quickly become an operational nightmare at scale. Always plan for where you're going, not just where you are today.

Weigh Commercial Support vs. Community Strength

When things inevitably break at 2 a.m., the quality of the support you have access to makes all the difference. This support usually comes in two flavors: official paid support and the broader open-source community. For any mission-critical system, having a commercial support contract with a guaranteed service-level agreement (SLA) is often a must-have.

At the same time, a vibrant community is an incredible resource, offering a massive library of forums, tutorials, and ready-made solutions. The immense popularity of certain tools is a direct reflection of their community's strength. For example, Docker is used by 92% of organizations for containerization, while Kubernetes is the choice for 85% in orchestration. In CI/CD, Jenkins still holds a 46.35% market share, and for IaC, Terraform leads with 41% adoption. This widespread use means better community support and a larger talent pool to hire from - a crucial factor you can dig into with these key DevOps statistics and trends.

Calculate the True Cost and Security Impact

Finally, you have to look past the sticker price. The Total Cost of Ownership (TCO) is what really matters. This includes not just licensing fees, but also the infrastructure costs to run the tool and, most importantly, the engineering time spent on maintenance, configuration, and training. An open-source tool might be "free" to download, but the operational overhead can easily eclipse the cost of a managed commercial service.
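A back-of-the-envelope TCO comparison makes the point concrete. Every figure below is a hypothetical placeholder, not vendor pricing. Substitute your own licensing quotes, infrastructure bills, and loaded engineering rates.

```python
from dataclasses import dataclass


@dataclass
class ToolCost:
    """Cost inputs for one tool option over a year (all values hypothetical)."""
    license_per_year: float
    infra_per_month: float
    maintenance_hours_per_month: float
    training_hours_one_off: float = 0.0


def annual_tco(cost: ToolCost, hourly_rate: float) -> float:
    """Licensing + 12 months of infrastructure + engineering time at a loaded hourly rate."""
    engineering_hours = cost.maintenance_hours_per_month * 12 + cost.training_hours_one_off
    return cost.license_per_year + cost.infra_per_month * 12 + engineering_hours * hourly_rate


# Hypothetical comparison: a self-hosted open-source tool vs. a managed service.
self_hosted = ToolCost(license_per_year=0, infra_per_month=800,
                       maintenance_hours_per_month=40, training_hours_one_off=80)
managed = ToolCost(license_per_year=30_000, infra_per_month=0,
                   maintenance_hours_per_month=4, training_hours_one_off=20)

RATE = 100.0  # hypothetical loaded cost per engineering hour
print(annual_tco(self_hosted, RATE))  # 65600.0 = 0 + 9,600 infra + 560 h * 100
print(annual_tco(managed, RATE))      # 36800.0 = 30,000 license + 68 h * 100
```

With these made-up inputs, the "free" tool costs more. The result flips quickly if your maintenance estimate is lower or the license is pricier, which is exactly why it's worth running the numbers with real figures.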

Security can never be an afterthought. Dig into a tool's built-in security capabilities, like secrets management, integrations for vulnerability scanning, and detailed audit logs. Does it help you meet your compliance needs, whether that's SOC 2, HIPAA, or GDPR? A tool that forces you to create security workarounds isn't a tool - it's a ticking time bomb.
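Whatever tool you pick, the baseline rule stays the same. The platform's secrets manager injects secrets at runtime, and you never commit them to the repo or print them to build logs. The sketch below assumes the CI system exposes each secret as an environment variable. The helper names are illustrative, not any platform's API.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected into the environment."""


def require_secret(name: str) -> str:
    """Read a secret the CI platform injected as an environment variable; fail fast if absent."""
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def redact(text: str, secret: str) -> str:
    """Mask a secret value before a message is written to build logs."""
    return text.replace(secret, "***") if secret else text
```

A deploy step would call require_secret("DEPLOY_TOKEN") once at startup (DEPLOY_TOKEN being a hypothetical name). That way, a misconfigured pipeline fails immediately instead of halfway through a release. GitHub Actions and GitLab CI can already mask registered secrets in job logs, so redact is only a fallback for derived values they don't know about.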

Comparing CI/CD and Automation Platforms

Your Continuous Integration and Continuous Delivery (CI/CD) platform is the engine of your entire DevOps practice. It's what turns every code commit into a tangible, deployed application, making it one of the most consequential decisions you'll make for your toolchain. When we do a DevOps tools comparison in this space, it almost always boils down to three major players: the old guard, Jenkins; the all-in-one platform, GitLab CI; and the developer-focused newcomer, GitHub Actions.

The choice between them really hinges on a core philosophy. Are you looking for unbounded flexibility with a massive community, or do you value a seamless, integrated experience that works out of the box? It also helps to have a baseline understanding of workflow automation before you compare. This complete guide to workflow automation software covers the fundamentals.

This decision usually comes down to balancing a handful of criteria for any tool you're evaluating, including how well it integrates with your stack, how far it scales, and what it costs.

The "best" choice is completely situational. It depends on whether you need to plug into a sprawling, diverse tech stack or scale up your builds without costs spiraling out of control.

Jenkins: The Customizable Workhorse

Jenkins is one of the oldest open-source automation servers still in wide use, and that maturity is a real asset. Its defining strength, though, has always been flexibility. With a plugin library that numbers in the thousands, you can bend Jenkins to do just about anything. If you've got a weird, complex, or legacy workflow, chances are Jenkins can handle it.

But all that power comes with a price. Jenkins demands significant setup and constant care. You're on the hook for managing everything: the controller, the agents, the plugins, and all the underlying infrastructure. In bigger companies, this can easily become a full-time job for a whole team, requiring deep expertise just to keep things secure and running smoothly.

- Best For: Teams with highly custom, non-standard pipelines or those needing to integrate with a wide array of on-premise or legacy systems.

- Pipeline-as-Code: Jenkins uses a Groovy-based Domain-Specific Language (DSL) in a Jenkinsfile. This gives you immense programmatic control but also comes with a much steeper learning curve than simple YAML.

- Scalability: It can scale to massive workloads, but getting there requires serious architectural planning. Think carefully managed Kubernetes deployments and sophisticated agent provisioning strategies.

GitLab CI: The All-In-One Platform

GitLab CI flips the script completely by embedding CI/CD directly into its source code management platform. If your team is already using GitLab for its repositories, the experience is incredibly streamlined. There's no separate tool to install, manage, or secure - it's just there, part of one unified interface.

The main draw here is simplicity. You define your pipelines in a clean, straightforward YAML file (.gitlab-ci.yml) right inside your repo, making it incredibly easy for developers to jump in. GitLab can manage the runners (the build agents) for you, or you can host your own if you need more control or specific hardware.

GitLab CI's entire value proposition is about crushing toolchain complexity. By bundling source control, CI/CD, package registries, and security scanning into a single product, it largely eliminates the "integration tax" teams pay when trying to stitch together a dozen different tools.

Of course, this all-in-one model isn't for everyone. If your organization prefers to pick best-of-breed tools for each job - like using GitHub for source control and Artifactory for artifacts - bolting on GitLab CI can feel redundant and create friction. For a more detailed breakdown, our comprehensive CI/CD tools comparison at https://www.john-pratt.com/cicd-tools-comparison/ offers a deeper dive.

GitHub Actions: The Developer-First Experience

GitHub Actions has exploded in popularity, and for good reason. It's woven directly into the fabric of the world's largest code hosting platform. Much like GitLab CI, it uses a simple YAML syntax (in the .github/workflows/ directory) and lives right next to your code, which makes it feel incredibly natural for developers.

Its killer feature is the Actions Marketplace, a massive and growing ecosystem of reusable workflows built by the community and vendors. This lets you assemble powerful pipelines in minutes by grabbing pre-built Actions for common tasks, like deploying to AWS, scanning for vulnerabilities with Snyk, or publishing a package to npm.

- Ease of Setup: For anyone already on GitHub, getting started is almost trivial. You can enable Actions and have a basic pipeline running in minutes.

- Community Support: The marketplace is its biggest superpower, offering thousands of building blocks that dramatically speed up pipeline development.

- Cost Model: GitHub is very generous with its free tier for public repos and has a simple pay-as-you-go model for private ones. This can be extremely cost-effective for smaller teams or projects with infrequent builds.

The trade-off? For highly complex, enterprise-grade release orchestration, its capabilities can feel a bit less mature than the programmatic control you get with Jenkins. It's fundamentally built around event-driven workflows that start inside GitHub (like a push or pull_request), which can make it clunky for handling external triggers or more intricate, multi-stage release processes.
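External triggers are possible, just less native. GitHub's REST API exposes a repository_dispatch endpoint that an outside system, such as a release tool, can call. The call starts any workflow that declares on: repository_dispatch. The sketch below only builds the request with the standard library and does not send it. The owner, repository, event type, and payload are hypothetical, and a real call needs a token allowed to trigger workflows on that repository.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_dispatch_request(owner: str, repo: str, event_type: str,
                           payload: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a repository_dispatch request for GitHub Actions.

    The target workflow must declare `on: repository_dispatch` to react to it.
    """
    body = json.dumps({"event_type": event_type, "client_payload": payload}).encode("utf-8")
    return urllib.request.Request(
        url=f"{GITHUB_API}/repos/{owner}/{repo}/dispatches",
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Hypothetical example: an external release tool kicks off a deploy workflow.
req = build_dispatch_request("example-org", "example-app", "deploy-staging",
                             {"version": "1.4.2"}, token="<redacted>")
# urllib.request.urlopen(req) would send it; GitHub replies 204 No Content on success.
```

It works, but the orchestration logic now lives partly outside GitHub. That split is the clunkiness described above.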

To make the choice clearer, here's a quick summary of how these platforms compare for different needs.

CI/CD Platform At-a-Glance Comparison

| Tool | Best For | Configuration Style | Integration Strength | Key Differentiator |
|---|---|---|---|---|
| Jenkins | Organizations with complex, bespoke, or legacy workflows. | Groovy Jenkinsfile (programmatic) | Unmatched via thousands of plugins for nearly any tool. | Ultimate flexibility and customizability. |
| GitLab CI | Teams seeking a single, unified platform for the entire SDLC. | YAML .gitlab-ci.yml (declarative) | Excellent within the GitLab ecosystem (SCM, registry, security). | Seamless all-in-one developer experience. |
| GitHub Actions | Developer-centric teams wanting fast, easy, and community-driven CI. | YAML .github/workflows/ (declarative) | Massive ecosystem via the Actions Marketplace. | Developer-first workflow and a vibrant community marketplace. |

Ultimately, the right tool aligns with your team's existing workflow, technical expertise, and overall philosophy on how to build and ship software. Each of these three can be a fantastic choice in the right context.

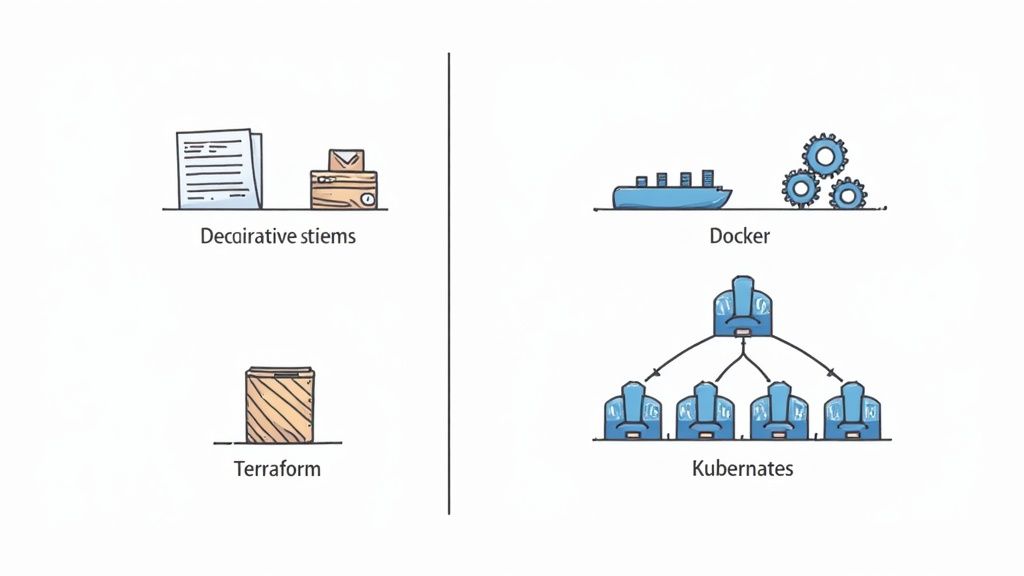

Analyzing IaC and Containerization Tools

Infrastructure as Code (IaC) and containerization are really the two bedrock principles for building and shipping modern software. When we compare tools in this space, it's not about finding one ultimate winner. It's about understanding how different tools solve different parts of the puzzle - often working together. Let's break down the core philosophies behind the major players so you can build a stable, automated foundation for your apps.

We're going to focus on two classic matchups: Terraform versus Ansible for managing infrastructure, and Docker versus Kubernetes for packaging and running applications. Getting their distinct roles straight is the first step to designing a DevOps workflow that actually works.

Terraform vs. Ansible: A Tale of Two Philosophies

On the surface, Terraform and Ansible look like they do the same thing, but they come at the problem from completely different angles. Terraform is a declarative tool built for infrastructure provisioning. You simply tell it what you want your cloud environment to look like - say, "I need three EC2 instances and a load balancer" - and Terraform's engine figures out the most efficient way to get there.

Ansible, on the other hand, is primarily a configuration management tool with a procedural style. A playbook follows a recipe, executing a specific sequence of tasks to set up existing servers (each task is usually written to be idempotent, so rerunning it is safe). With Ansible, you tell it how to get to the desired state, with steps like, "first install Apache, then copy this config file, and finally restart the service."

This is the key difference that defines where each tool fits best. You use Terraform to build the house (provisioning servers, networks, and databases) and Ansible to furnish it (installing software, applying security patches, and managing users).
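The declarative half of this contrast is easier to see in miniature. The toy model below is not how Terraform is implemented, and it ignores details like forced replacement. It diffs a desired state against the current state and derives a plan of actions, which is conceptually what a plan step does. A procedural tool would instead just run the ordered list of steps you wrote. The resource names and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

# Resource name -> attributes. A toy stand-in for real provider resources.
State = Dict[str, dict]


def declarative_plan(desired: State, current: State) -> List[Tuple[str, str]]:
    """Derive the actions that would make `current` match `desired` (toy plan model)."""
    plan: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            plan.append(("create", name))
        elif current[name] != desired[name]:
            plan.append(("update", name))
    for name in sorted(set(current) - set(desired)):
        plan.append(("destroy", name))
    return plan


# Hypothetical desired vs. actual infrastructure.
desired = {"web-1": {"size": "small"}, "web-2": {"size": "small"}, "lb": {"port": 443}}
current = {"web-1": {"size": "micro"}, "old-worker": {"size": "micro"}}

print(declarative_plan(desired, current))
# [('create', 'lb'), ('update', 'web-1'), ('create', 'web-2'), ('destroy', 'old-worker')]
print(declarative_plan(desired, desired))  # [] : nothing to do once reality matches the code
```

The empty second plan is the practical payoff. Reapplying declarative code against infrastructure that already matches it does nothing, so you never have to reason about what order things ran in last time.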

A powerful and incredibly common pattern is to use Terraform to stand up the raw infrastructure, then pass the new server IPs to Ansible to handle the detailed configuration. This approach plays to the strengths of both tools, neatly separating the state of your infrastructure from the configuration of your machines.
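Here's one way that hand-off might look. terraform output -json prints each output as an object with sensitive, type, and value keys. This sketch turns a list output into a minimal INI-style Ansible inventory. The output name web_ips, the group name, the SSH user, and the IPs are all hypothetical.

```python
import json
from typing import List


def inventory_from_terraform(output_json: str, output_name: str = "web_ips",
                             group: str = "web", ssh_user: str = "ubuntu") -> str:
    """Convert `terraform output -json` text into an INI-style Ansible inventory."""
    outputs = json.loads(output_json)
    ips: List[str] = outputs[output_name]["value"]
    lines = [f"[{group}]"]
    lines += [f"{ip} ansible_user={ssh_user}" for ip in ips]
    return "\n".join(lines) + "\n"


# Sample shaped like `terraform output -json` for a list(string) output (hypothetical IPs).
sample = json.dumps({
    "web_ips": {"sensitive": False, "type": ["list", "string"],
                "value": ["10.0.1.10", "10.0.1.11"]}
})

print(inventory_from_terraform(sample))
# [web]
# 10.0.1.10 ansible_user=ubuntu
# 10.0.1.11 ansible_user=ubuntu
```

You would write the result to a file and pass it with ansible-playbook -i. Once the fleet grows, Ansible's dynamic inventory plugins are usually a sturdier option than generated files.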

To get a better handle on the strategy behind IaC, it's worth reading through these 10 Infrastructure as Code best practices.

When to Choose Terraform

Terraform really comes into its own when you're managing the lifecycle of cloud infrastructure, especially if you're working in a multi-cloud or hybrid setup. Its state file acts as a single source of truth, allowing it to plan and apply changes with predictable results.

- Multi-Cloud Deployments: Its provider-agnostic design makes it the default choice for managing resources across AWS, Azure, and GCP from a single codebase.

- Immutable Infrastructure: Terraform is built for creating and destroying resources from scratch, which is a core concept in modern infrastructure management.

- Complex Dependency Management: It automatically maps out dependencies between your resources, so it knows to create a virtual network before trying to launch a VM inside it.
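Under the hood, this is a dependency-graph problem. The sketch below uses Python's graphlib module (Python 3.9+) to order a few hypothetical resources the way an IaC engine conceptually would. It also groups them into waves of resources that don't depend on each other. Terraform likewise walks independent branches of its graph in parallel, but its real graph logic is far richer than this toy.

```python
from graphlib import TopologicalSorter

# Hypothetical resources mapped to the resources they depend on.
dependencies = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security_group": {"vpc"},
    "vm": {"subnet", "security_group"},
    "load_balancer": {"subnet", "vm"},
}


def creation_order(graph: dict) -> list:
    """Return one valid order in which every resource comes after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def creation_waves(graph: dict) -> list:
    """Group resources into waves; everything within a wave could be created in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(creation_waves(dependencies))
# [['vpc'], ['security_group', 'subnet'], ['vm'], ['load_balancer']]
```

Destroying infrastructure walks the same graph in reverse, which is why the load balancer goes before the network it sits in.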

If your team is just starting out with IaC, the best thing to do is dive in. This practical Terraform tutorial for beginners is a great place to start building those fundamental skills.

When to Choose Ansible

Ansible is the perfect fit for environments where you need to manage the configuration of existing, mutable infrastructure or run ordered, step-by-step tasks. Its agentless design, which just uses standard SSH, makes it ridiculously easy to get started with.

- Application Deployment: It's fantastic for orchestrating multi-tier application deployments where specific steps must happen in a precise sequence.

- Configuration Drift Management: You can run it repeatedly to nudge systems back into their desired state, correcting any manual changes or "drift" that has occurred.

- Zero-Downtime Rolling Updates: Ansible's procedural control makes it great for coordinating complex update sequences across a fleet of servers without taking everything offline.
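Ansible expresses rolling updates with the play-level serial keyword. For example, serial: 2 updates two hosts at a time. The sketch below models that batching plus a simple stop-if-a-batch-fails rule. That failure rule is an assumption, since Ansible's own failure handling is configurable. The host names and the update_host callback are hypothetical stand-ins.

```python
from typing import Callable, Iterator, List


def batches(hosts: List[str], size: int) -> Iterator[List[str]]:
    """Yield hosts in fixed-size batches, like a play with `serial: size`."""
    if size < 1:
        raise ValueError("batch size must be at least 1")
    for start in range(0, len(hosts), size):
        yield hosts[start:start + size]


def rolling_update(hosts: List[str], size: int, update_host: Callable[[str], bool]) -> List[str]:
    """Update hosts batch by batch; stop before the next batch if any host in this one fails.

    Returns the hosts updated successfully. The rest stay on the old version,
    so the fleet keeps serving traffic while you investigate.
    """
    updated: List[str] = []
    for batch in batches(hosts, size):
        results = {host: update_host(host) for host in batch}
        updated += [host for host, ok in results.items() if ok]
        if not all(results.values()):
            break
    return updated


fleet = [f"web-{n}" for n in range(1, 7)]  # hypothetical hosts web-1 .. web-6
print(list(batches(fleet, 2)))
# [['web-1', 'web-2'], ['web-3', 'web-4'], ['web-5', 'web-6']]
print(rolling_update(fleet, 2, update_host=lambda host: host != "web-4"))
# ['web-1', 'web-2', 'web-3']
```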

Docker and Kubernetes: The Symbiotic Duo

The "Docker vs. Kubernetes" debate is a common point of confusion. The truth is, they aren't competitors. They are complementary technologies that form a powerful partnership. Docker gives you a standard package for your application, and Kubernetes provides the brain to run and manage those packages at scale.

Docker is what you use to build and run individual containers. It solves the age-old "it works on my machine" problem by bundling your application's code, runtime, and all its dependencies into a single, self-contained, portable image. For a small project, you might get by just fine with Docker and Docker Compose to manage a handful of containers on one machine.

Kubernetes, however, is a full-blown container orchestrator. It's what you need when you're trying to run hundreds or thousands of containers across a whole cluster of machines. Kubernetes steps in to handle the complex, painful tasks that are impractical to manage by hand at that scale.

- Service Discovery and Load Balancing: It automatically directs network traffic to healthy containers.

- Automated Rollouts and Rollbacks: It manages application updates gracefully, enabling zero-downtime deployments.

- Self-Healing: If a container crashes, Kubernetes restarts it. If a whole server dies, it reschedules the containers onto healthy nodes.

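- Secret and Configuration Management: It lets you manage sensitive data like passwords and API keys separately from your code, without hardcoding them into your container images. By default, Kubernetes Secrets are only base64-encoded, so pair them with encryption at rest and tight RBAC.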
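Much of this behavior comes from one idea. Controllers run a reconciliation loop that compares the desired state you declared with what's actually running, then closes the gap. The toy sketch below models only the self-healing piece for a single Deployment-like object. The node names and data shapes are hypothetical, and the logic is far simpler than the real scheduler and ReplicaSet controller.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import List, Set


@dataclass
class Pod:
    name: str
    node: str
    running: bool = True


@dataclass
class Cluster:
    healthy_nodes: Set[str]
    pods: List[Pod] = field(default_factory=list)


_pod_ids = count(1)


def reconcile(cluster: Cluster, desired_replicas: int) -> None:
    """One pass of a toy controller: drop dead pods, then create or remove pods to match."""
    # Crashed pods and pods on failed nodes no longer count toward the replica total.
    cluster.pods = [p for p in cluster.pods if p.running and p.node in cluster.healthy_nodes]
    nodes = sorted(cluster.healthy_nodes)
    if not nodes:
        return  # nowhere to schedule replacements
    while len(cluster.pods) < desired_replicas:
        # Naive spreading: place each new pod on the healthy node with the fewest pods.
        node = min(nodes, key=lambda n: sum(p.node == n for p in cluster.pods))
        cluster.pods.append(Pod(name=f"app-{next(_pod_ids)}", node=node))
    del cluster.pods[desired_replicas:]  # scale down if there are too many


cluster = Cluster(healthy_nodes={"node-a", "node-b"})
reconcile(cluster, desired_replicas=3)       # schedules 3 pods across both nodes
cluster.healthy_nodes.discard("node-b")      # simulate a node failure
reconcile(cluster, desired_replicas=3)       # the lost pod is recreated on node-a
print(sorted(p.node for p in cluster.pods))  # ['node-a', 'node-a', 'node-a']
```

In a real cluster, this loop runs continuously. That is why you declare replicas: 3 once instead of scripting restarts.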

In a typical workflow, a developer writes a Dockerfile to build an application image. That image gets pushed to a registry. From there, Kubernetes pulls the image and deploys it as a container across the cluster, making sure it stays running, scaled, and available according to the rules you've defined. You start with Docker, but you need an orchestrator like Kubernetes to achieve real production resilience.

Choosing Your Monitoring and Observability Stack

Once your applications are live, you're flying blind without a way to see what's happening under the hood. A solid monitoring and observability stack is non-negotiable for understanding the health and performance of your systems. In this part of our DevOps tools comparison, we'll dig into three of the most common approaches teams consider.

We're going to look at the open-source classic, Prometheus and Grafana, then pivot to the log-focused ELK Stack, and finally, the all-in-one SaaS option, Datadog. Each has its own philosophy, and the best fit for you really depends on what problems you're trying to solve.

The need for clear visibility is a huge factor in the DevOps market's growth. Valued at $12.54 billion in 2024, it's projected to climb to $14.95 billion by 2025. You can get more details on the DevOps automation tools market size and its trajectory on SSOjet.

Prometheus and Grafana for Metrics-Driven Monitoring

If your team lives and breathes in a cloud-native world, especially with Kubernetes, the combination of Prometheus and Grafana is probably your go-to starting point. Prometheus is brilliantly simple in its focus: it collects and stores time-series data - metrics. Using a pull-based model, it scrapes these metrics from your services at regular intervals.
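Because Prometheus pulls, your service only has to expose a /metrics endpoint in Prometheus' text exposition format, and Prometheus scrapes it on its own schedule. In practice, you'd use an official client library such as prometheus_client. This standard-library-only sketch just shows how little is involved. The metric name and port are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict

# Hypothetical in-process counters, keyed by HTTP status code.
REQUEST_COUNTS: Dict[str, int] = {"200": 0, "500": 0}


def render_metrics(counts: Dict[str, int]) -> str:
    """Render counters in the Prometheus text exposition format."""
    lines = [
        "# HELP app_http_requests_total Total HTTP requests handled.",
        "# TYPE app_http_requests_total counter",
    ]
    for code in sorted(counts):
        lines.append(f'app_http_requests_total{{code="{code}"}} {counts[code]}')
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves GET /metrics so a Prometheus server can scrape it."""

    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(REQUEST_COUNTS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Block forever, serving metrics on the given (hypothetical) port."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

A scrape_configs entry in prometheus.yml that points at this host and port is all Prometheus needs to start pulling.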

That's where Grafana comes in. It takes the raw numbers from Prometheus and transforms them into insightful dashboards that show performance trends, system health, and application behavior. The duo is especially strong for metrics-driven alerting. Alert rules are evaluated by Prometheus and routed through Alertmanager, or defined in Grafana itself. When CPU usage spikes or error rates cross a threshold, you find out in near real time.

- Best For: Engineering teams who need powerful, real-time metrics and alerts, with the freedom to build any dashboard they can imagine.

- Key Strength: Its tight integration with the cloud-native ecosystem makes it the de facto standard for monitoring containerized workloads in Kubernetes.

- Consideration: This isn't a managed service. You are responsible for hosting, managing, and scaling both tools, which adds to your operational workload.
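Alerting rules typically compare a rate of change against a threshold. They fire only once the condition has held for a while, which is what the for: clause of a Prometheus alerting rule controls. Here is that arithmetic as a small sketch. The 5% threshold, timestamps, and counter values are hypothetical. Real PromQL rate() also handles counter resets and extrapolation, which this ignores.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (unix timestamp in seconds, counter value)


def per_second_rate(samples: List[Sample]) -> float:
    """Naive per-second increase between the first and last samples of a counter."""
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("need at least two samples with increasing timestamps")
    return (v1 - v0) / (t1 - t0)


def error_ratio(errors: List[Sample], requests: List[Sample]) -> float:
    """Share of requests that failed over the sampled window."""
    request_rate = per_second_rate(requests)
    return per_second_rate(errors) / request_rate if request_rate > 0 else 0.0


def should_fire(ratios: List[float], threshold: float, for_evaluations: int) -> bool:
    """Fire only if each of the last `for_evaluations` evaluations breached the threshold."""
    if for_evaluations < 1:
        raise ValueError("for_evaluations must be at least 1")
    recent = ratios[-for_evaluations:]
    return len(recent) == for_evaluations and all(r > threshold for r in recent)


# Hypothetical 5-minute window: 6,000 requests, 420 of them errors.
requests = [(0.0, 10_000.0), (300.0, 16_000.0)]
errors = [(0.0, 100.0), (300.0, 520.0)]
ratio = error_ratio(errors, requests)
print(round(ratio, 3))  # 0.07, above a hypothetical 5% threshold
print(should_fire([0.02, 0.06, ratio], threshold=0.05, for_evaluations=3))  # False: 0.02 breaks the streak
```

Requiring a sustained breach trades a little detection speed for far fewer pages about one-off blips.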

The ELK Stack for Deep Log Analysis

While Prometheus tells you what is happening with metrics, the ELK Stack - Elasticsearch, Logstash, and Kibana - helps you understand why it's happening through logs. This stack was built from the ground up to aggregate, search, and analyze enormous volumes of log data from every corner of your infrastructure.

Elasticsearch acts as the powerful search and analytics engine. Logstash is the workhorse that ingests and processes the logs, and Kibana is the front-end for searching and visualizing it all. When a developer is hunting down a tricky production bug, sifting through structured logs in Kibana is often the quickest path to a root cause. This kind of deep diagnostic power is a critical feature that many specialized database performance monitoring tools also rely on.
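Kibana searches are only as good as the structure of the logs you ship. If you emit one JSON object per log line, Logstash (or a lightweight shipper such as Filebeat) can index fields like level or order_id without fragile regex parsing. Here is a minimal standard-library sketch. The field names and the extra={"fields": ...} convention are assumptions for illustration.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "@timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured context passed via `extra={"fields": {...}}` (a convention assumed here).
        entry.update(getattr(record, "fields", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(name: str = "checkout") -> logging.Logger:
    """Return a logger that writes JSON lines to stderr (hypothetical service name)."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


log = make_logger()
log.info("payment failed", extra={"fields": {"order_id": "A-1042", "retry": 2}})
```

With logs in this shape, a Kibana query like order_id:A-1042 returns every line for that order across all services.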

Datadog offers a unified, out-of-the-box experience that abstracts away complexity, making it ideal for teams who value speed and simplicity. In contrast, the Prometheus and Grafana stack provides unparalleled customization and control, perfect for teams that want to build a bespoke monitoring solution from the ground up.

Datadog as a Unified Observability Platform

Datadog takes a completely different angle. It's a single, unified SaaS platform that pulls together metrics, logs, and application performance monitoring (APM) traces into one place. Instead of you having to stitch together different tools, Datadog gives you a cohesive experience where you can jump from a high-level dashboard metric straight down to the specific logs and code traces behind it.

This convenience is its biggest selling point. With over 700 integrations, you can get visibility into common technologies incredibly quickly. But this all-in-one power comes with a price tag. Datadog's pricing can be complex and can get very expensive as your infrastructure and data volumes grow.

Got Questions About Picking DevOps Tools?

It's easy to get lost in feature-by-feature comparisons, but choosing the right DevOps tools often comes down to bigger, more strategic questions. Figuring out your team's philosophy on toolchains is just as important as the tools themselves. It's a constant balancing act between flexibility, cost, and the sheer effort of keeping everything running smoothly.

A question I hear all the time is whether to go with an all-in-one platform like GitLab or to build a custom "best-of-breed" toolchain. Going with a single, integrated platform definitely simplifies things. You have one vendor to deal with and less time spent on integration headaches, which is great for teams who just want a straightforward workflow that works.

On the flip side, the best-of-breed approach gives you the freedom to pick the absolute best tool for every single job. This can give you a lot more power and flexibility, but be prepared - it also means you're responsible for making all those different pieces talk to each other.

The Open Source vs. Commercial Debate

Another classic dilemma is choosing between open-source tools and commercial SaaS products. Open-source champions like Jenkins or Prometheus offer incredible control and have no licensing fees, but they don't run themselves. The responsibility for setup, maintenance, scaling, and security falls entirely on your team, which can create a lot of hidden operational costs.

The "free" in open-source software is about freedom, not a free lunch. Your total cost of ownership often includes a hefty investment in engineering hours for maintenance, security, and just keeping the lights on.

Commercial tools, like Datadog or GitHub Actions, offer a different trade-off. You give up some fine-grained control in exchange for convenience and dedicated support. They handle the infrastructure for you, which means a faster start and a service-level agreement (SLA) you can count on. This is usually the better path for teams who'd rather spend their time building features, not managing internal tools.

Ultimately, any DevOps tools comparison comes down to your team's skills, budget, and what you're trying to achieve.

At Pratt Solutions, we live and breathe this stuff. We specialize in crafting and implementing DevOps toolchains that fit your specific needs, whether you're starting from scratch with a new CI/CD pipeline or looking to get more out of your cloud setup. We offer expert consulting to help you ship software faster.

Find out more about our custom cloud and automation services.